How do I deploy my Symfony API - Part 4 - Deploy

This is the fourth article in a series of blog posts on how to deploy a Symfony-based PHP application to a Docker Swarm cluster hosted on Amazon EC2 instances. Together, the posts describe the whole deploy process from development to production; this one focuses on the final step: the deploy.

The first article is available here, the second here and the third here.

Having covered steps 1-3 and prepared our infrastructure, we can now look at how to deploy the application to production. Almost the same approach can be used to deploy to test environments as well.

Workflow

Different "git push" operations should trigger different actions.

For example, a push to master should trigger a deploy to production, while other branches may trigger a deploy to a test environment, or no deploy at all.

I've used CircleCI Workflows to manage this set of decisions.

Workflows are just another section of the same .circleci/config.yml file; here is ours:

```yaml
workflows:
  version: 2
  build_and_deploy-workflow:
    jobs:
      - build
      - deploy_to_live:
          requires:
            - deploy_to_live_approval
          filters:
            branches:
              only:
                - master
      - deploy_to_live_approval:
          type: approval
          requires:
            - build
```

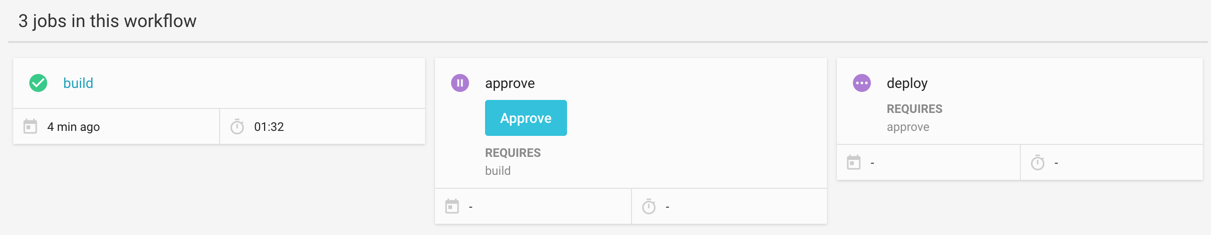

In this workflow configuration we have three jobs:

- build: the main job, explained in the second article, responsible for pushing the images to the Docker registry.
- deploy_to_live: the job that deploys to live (more on it in a moment). It runs only for the branch named master, and before running it requires the successful completion of a job called deploy_to_live_approval.
- deploy_to_live_approval: a job of type "approval". Completing it is just a matter of clicking a button in the CircleCI web interface, which lets us decide whether to deploy to live or not.

The node build_and_deploy-workflow is just the workflow name. CircleCI allows multiple workflows for the same project, but we will not cover that topic here.
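Other branches can be wired to a test environment in the same workflow. The following is only a sketch: the deploy_to_test job and the develop branch are hypothetical, and deploy_to_test is assumed to be defined like deploy_to_live but with DOCKER_HOST pointing at a test swarm manager. It also adds a branch filter to the approval job: without one, the approval job is not restricted to master, so a pending approval would likely show up in the workflow of every branch, even though deploy_to_live itself only runs on master.

```yaml
workflows:
  version: 2
  build_and_deploy-workflow:
    jobs:
      - build
      # hypothetical job: same steps as deploy_to_live, different DOCKER_HOST and VPN config
      - deploy_to_test:
          requires:
            - build
          filters:
            branches:
              only:
                - develop # hypothetical branch name
      - deploy_to_live_approval:
          type: approval
          requires:
            - build
          # restrict the manual approval to master as well
          filters:
            branches:
              only:
                - master
      - deploy_to_live:
          requires:
            - deploy_to_live_approval
          filters:
            branches:
              only:
                - master
```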

The deploy job

As mentioned, the job named deploy_to_live is responsible for the live deploy.

It is just another portion of the same .circleci/config.yml file we saw in this and the previous articles.

```yaml
version: 2
executorType: machine
jobs:
  build: # this is the job that pushes the images to the registry
    # ...
  deploy_to_live: # this is the job that effectively deploys to live
    working_directory: ~/my_ap
    environment:
      - DOCKER_HOST: "tcp://myapp-manager.yyy.local:2375"
    steps:
      - *helpers_system_basic
      - *helpers_docker
      - run: sudo apt-get -qq -y install openvpn
      - checkout
      - add_ssh_keys:
          fingerprints:
            - "af:83:39:00:ad:af:83:39:00:ad:af:83:39:00:ad:99" # import VPN private key
      - run:
          name: Connect to VPN
          command: |
            sudo openvpn --daemon --cd .circleci/vpn-live --config my-vpn-config.ovpn
            while ! (echo "$DOCKER_HOST" | sed 's/tcp:\/\///'|sed 's/:/ /' |xargs nc -w 2) ; do sleep 1; done
      - deploy:
          name: Deploy
          command: |
            docker login -u $DOCKER_HUB_USERNAME -p $DOCKER_HUB_PASS
            docker stack deploy live --compose-file=docker-compose.live.yml --with-registry-auth
```

Step by step

Let's analyze the deploy job step by step by looking in detail at the .circleci/config.yml file.

Preparation

```yaml
environment:
  - DOCKER_HOST: "tcp://myapp-manager.yyy.local:2375"
steps:
  - *helpers_system_basic # use basic system configurations helper
  - *helpers_docker # use basic docker installation helper
  - run: sudo apt-get -qq -y install openvpn # install openvpn client
  - checkout # checkout the source code
```

As in the build job, this part of the configuration file just sets up the basics of the deploy environment. The only difference from the build job is the installation of the openvpn package, which is needed to connect to the Docker Swarm manager that will run the deploy.

We also set an environment variable (DOCKER_HOST) so that the docker client talks to the daemon of our Docker Swarm cluster manager instead of a local one.
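The *helpers_system_basic and *helpers_docker entries are YAML aliases: they reuse snippets declared once, with an anchor (&name), elsewhere in the same config file, as in the build job. The real helpers come from the earlier articles; the sketch below only shows how such an anchor can be declared. The top-level references key and the step contents are hypothetical.

```yaml
# hypothetical top-level key used only to hold reusable snippets
references:
  helpers_system_basic: &helpers_system_basic
    run:
      name: Basic system setup # hypothetical step
      command: sudo apt-get -qq update

jobs:
  deploy_to_live:
    steps:
      # the alias expands to the whole "run:" mapping declared above
      - *helpers_system_basic
```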

Credentials

```yaml
- add_ssh_keys:
    fingerprints:
      - "af:83:39:00:ad:af:83:39:00:ad:af:83:39:00:ad:99"
```

This snippet imports the private key with fingerprint "af:83:39:00:ad:af:83:39:00:ad:af:83:39:00:ad:99" into the environment.

The key must first be added through the CircleCI web interface. It will then be available at /home/circleci/.ssh/id_rsa_af833900adaf833900adaf833900ad99.
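How OpenVPN picks up this key depends on the .ovpn file, which is not shown here. If that file references the key through a relative path (for instance with a `key client.key` directive, resolved inside .circleci/vpn-live because openvpn is started with `--cd`), a step like the following could copy the key there before connecting. The client.key name is an assumption; referencing the absolute path directly in the .ovpn file would work just as well.

```yaml
- run:
    name: Make the VPN key available to OpenVPN
    command: |
      # add_ssh_keys names the file after the fingerprint, without the colons
      cp ~/.ssh/id_rsa_af833900adaf833900adaf833900ad99 .circleci/vpn-live/client.key # hypothetical target name
      # keep the private key readable only by the current user
      chmod 600 .circleci/vpn-live/client.key
```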

VPN

```yaml
- run:
    name: Connect to VPN
    command: |
      sudo openvpn --daemon --cd .circleci/vpn-live --config my-vpn-config.ovpn
      while ! (echo "$DOCKER_HOST" | sed 's/tcp:\/\///'|sed 's/:/ /' |xargs nc -w 2) ; do sleep 1; done
```

This connects to the VPN and then waits until myapp-manager.yyy.local accepts connections on port 2375, the port set in DOCKER_HOST. The two sed commands strip the "tcp://" prefix and turn "host:port" into "host port", which xargs passes to nc as arguments.

The folder .circleci/vpn-live contains the OpenVPN configuration files needed to connect to the VPN. I will not cover them here, as they are a completely different subject.
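The wait loop retries forever, so an unreachable manager would keep the job hanging until CircleCI's no-output timeout (10 minutes by default) stops it. A possible variant, sketched below, gives up after about 60 attempts and then runs `docker node ls`, which only succeeds against a Swarm manager, so the step fails early with a clear error. The retry count is an arbitrary choice.

```yaml
- run:
    name: Connect to VPN
    command: |
      sudo openvpn --daemon --cd .circleci/vpn-live --config my-vpn-config.ovpn
      # "tcp://host:port" -> "host port", left unquoted below so it splits into two nc arguments
      target=$(echo "$DOCKER_HOST" | sed -e 's|^tcp://||' -e 's|:| |')
      # bounded wait instead of an endless loop
      for i in $(seq 1 60); do
        nc -z -w 2 $target && break
        sleep 1
      done
      # fails the step if DOCKER_HOST is unreachable or is not a Swarm manager
      docker node ls
```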

The deploy

```yaml
- deploy:
    name: Deploy
    command: |
      docker login -u $DOCKER_HUB_USERNAME -p $DOCKER_HUB_PASS
      docker stack deploy live --compose-file=docker-compose.live.yml --with-registry-auth
```

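This is the most important part: the deploy to the cluster.

We log in to the Docker registry and then run docker stack deploy, which actually deploys the application. The --with-registry-auth flag sends the registry credentials to the Swarm agents, so the nodes can pull the images.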
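Passing the password with `-p` makes it visible in the process list, and the docker client warns about it. With Docker 17.07 or later, the same step can read the password from standard input instead; a sketch, also quoting the variables:

```yaml
- deploy:
    name: Deploy
    command: |
      # the password is piped in, so it never appears as a command-line argument
      echo "$DOCKER_HUB_PASS" | docker login -u "$DOCKER_HUB_USERNAME" --password-stdin
      docker stack deploy live --compose-file=docker-compose.live.yml --with-registry-auth
```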

The omitted part: your application most probably needs credentials for the database connection, API keys and many other configuration values.

A good way to handle credentials is Docker secrets management, but in this application I've used Symfony environment variables to configure the application: I placed them in the CircleCI web interface and exported them right before running docker stack deploy.

```shell
export DB_USER="$LIVE_DB_USER"
export DB_PWD="$LIVE_DB_PWD"
docker stack deploy live --compose-file=docker-compose.live.yml --with-registry-auth
```
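The compose file used for the deploy does not show how these variables reach the containers. One way, sketched here as an assumption rather than the exact file used in the project, is compose variable substitution: docker stack deploy replaces `${...}` with values from the environment of the shell that runs it, and the php service can then read them through Symfony's `%env(DB_USER)%` syntax.

```yaml
# docker-compose.live.yml (excerpt)
services:
  php:
    image: goetas/api-php:master
    environment:
      # substituted with the values exported right before docker stack deploy
      DB_USER: ${DB_USER}
      DB_PWD: ${DB_PWD}
```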

Alternatively, it is possible to place the exports in a dedicated file (exports_live_vars.sh, for example) and source it:

```shell
source exports_live_vars.sh
docker stack deploy live --compose-file=docker-compose.live.yml --with-registry-auth
```
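For comparison, the Docker secrets approach mentioned above could look roughly like this for the database password. The secret name db_password and the DB_PWD_FILE variable are hypothetical. The secret is created beforehand on a manager node and mounted into the container as a file under /run/secrets/; on Symfony 3.4 or later, the application could read it with the `%env(file:DB_PWD_FILE)%` processor.

```yaml
# docker-compose.live.yml (excerpt)
version: '3.3'
services:
  php:
    image: goetas/api-php:master
    environment:
      # path of the file where Swarm mounts the secret
      DB_PWD_FILE: /run/secrets/db_password
    secrets:
      - db_password
secrets:
  db_password:
    # created beforehand, e.g.: printf '%s' "$LIVE_DB_PWD" | docker secret create db_password -
    external: true
```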

You may have noticed that I've used a different docker-compose file, named docker-compose.live.yml, for the deploy.

The docker-compose.live.yml

```yaml
# docker-compose.live.yml
version: '3.3'
services:
  php:
    image: goetas/api-php:master
    deploy:
      replicas: 6
      update_config:
        parallelism: 2
        delay: 30s
      restart_policy:
        condition: on-failure
  www:
    image: goetas/api-nginx:master
    deploy:
      replicas: 6
      update_config:
        parallelism: 2
        delay: 30s
      restart_policy:
        condition: on-failure
    ports:
      - "80:80"
```

This docker-compose.live.yml is much simpler than the docker-compose.yml file used for development.

The www container publishes port 80, so the web server is exposed.

The rest of the file only defines the names of the images to download from the registry and the deploy section, used to configure the number of replicas, the restart policy and the rolling update policy (this article gives a good overview of what a rolling update is).

The policies defined by deploy are:

- Deploy 6 containers for each service. Hopefully they will be uniformly distributed across the cluster, but this is not guaranteed; Docker offers a placement configuration option to instruct the scheduler on how to distribute containers across the cluster.
- When updating the containers (for example, on a second deploy), update two containers, then wait 30 seconds before updating the next two. Updating a container here means: download the latest image, stop and remove the old container, then create and start a new container from the new image.
- Restart the containers if they fail. This covers "weird" errors that should never happen, but eventually will.
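The placement option mentioned above can be sketched as follows for the php service (the www service would be analogous). The constraint keeps replicas off manager nodes, and the preference spreads them evenly across the values of a node label. The zone label is hypothetical and would first have to be set on each node, e.g. with `docker node update --label-add zone=a <node>`; placement preferences need compose file format 3.3, which this file already uses.

```yaml
# docker-compose.live.yml (excerpt)
version: '3.3'
services:
  php:
    image: goetas/api-php:master
    deploy:
      replicas: 6
      placement:
        constraints:
          # schedule only on worker nodes, leaving managers to cluster management
          - node.role == worker
        preferences:
          # distribute replicas evenly across the values of the hypothetical "zone" label
          - spread: node.labels.zone
      update_config:
        parallelism: 2
        delay: 30s
      restart_policy:
        condition: on-failure
```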

Conclusion

This article combines the results of the previous three articles and shows how it is possible to set up a relatively sophisticated and extensible continuous delivery pipeline.

The application has been developed, (tested!), built, (re-tested!) and deployed.

As usual, there are many topics that require attention and improvement, such as:

- How to run migrations for database schema changes?
- How to maximize server resource usage?
- How to secure the deploy better than with a VPN?
- What about healthchecks?

In the next article I will summarize these four blog posts and try to answer some of the open questions by showing improvements implemented in the project that were not easy to fit into the blog posts.